LATENT EMBEDDINGS

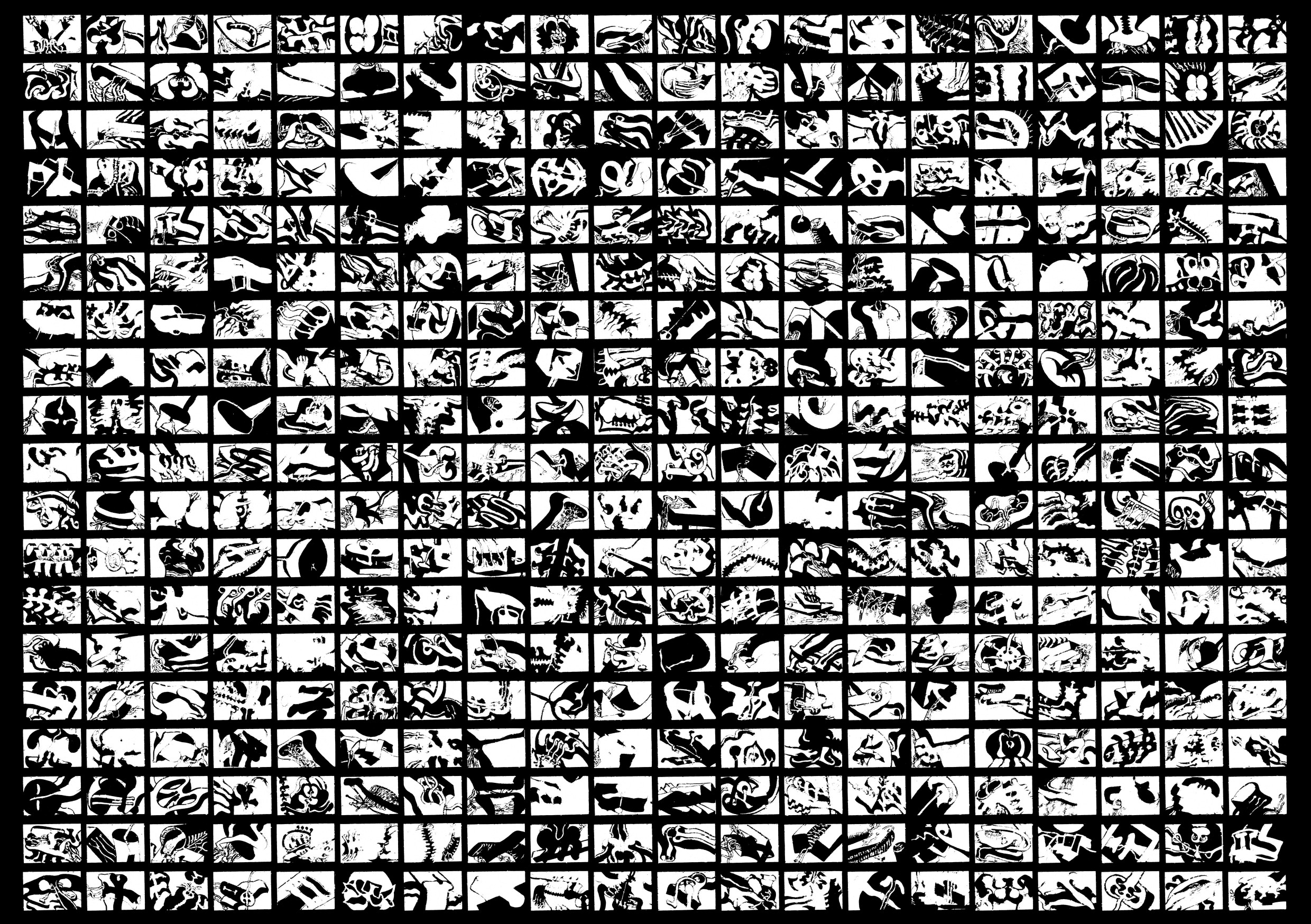

This project uses unsupervised machine learning techniques to revisit the animated film Continuous Sound and Image Moments, made in 1966 by Jeffrey Shaw, Willem Breuker, and Tjebbe van Tijen. The original film consisted of a sequence of hand drawings, each shown for only a few seconds.

In Latent Embeddings, digitized versions of those drawings are processed by a Vector Quantized Variational Autoencoder (VQ-VAE), an algorithm that uses variational inference techniques to construct a generative model. New images are then produced by exploring the latent space of the model.
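For readers curious about the "vector quantized" part of the name, the sketch below illustrates the general idea: each continuous latent vector produced by an encoder is replaced by its nearest entry in a learned codebook. This is a generic illustration rather than the project's own code; the function name `quantize`, the tiny two-dimensional codebook, and the sample values are all assumptions chosen for readability.

```python
import numpy as np

def quantize(latents, codebook):
    """Map each latent vector to its nearest codebook entry (Euclidean distance).

    Hypothetical helper for illustration; not taken from the project.
    """
    # Pairwise squared distances, shape (n_latents, n_codes).
    diffs = latents[:, None, :] - codebook[None, :, :]
    dists = np.sum(diffs ** 2, axis=-1)
    # Index of the closest code for every latent vector.
    indices = np.argmin(dists, axis=1)
    return indices, codebook[indices]

# Toy codebook with three 2-D entries (a trained model would learn these).
codebook = np.array([[0.0, 0.0], [1.0, 1.0], [-1.0, 2.0]])
# Toy encoder outputs, each lying near one of the codes.
latents = np.array([[0.1, -0.2], [0.9, 1.2], [-0.8, 1.7]])

indices, quantized = quantize(latents, codebook)
print(indices)
```

In a full VQ-VAE, a decoder would turn the grid of quantized vectors back into an image; that part is omitted here.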

These images resemble the hand-drawn originals to varying degrees. The artists control the extent of resemblance by constraining the algorithm either to remain close to the training dataset or to venture into more remote areas of the latent space, fluctuating between recognition and surprise, between memory and discovery.
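One possible way to picture this control, offered only as a sketch, is a single parameter that decides how much of a training drawing's discrete code grid is replaced by randomly chosen codes. The text does not say how the artists actually implement the constraint, so the function `explore`, the `exploration` parameter, the 8x8 grid, and the codebook size of 512 are all hypothetical.

```python
import numpy as np

def explore(code_grid, n_codes, exploration, rng):
    """Resample a fraction of code indices at random.

    exploration=0.0 returns the grid unchanged (stay with the training data);
    exploration=1.0 resamples every position (roam far from it).
    Hypothetical illustration, not the project's method.
    """
    # Positions selected for resampling, each with probability `exploration`.
    mask = rng.random(code_grid.shape) < exploration
    # Candidate replacement codes drawn uniformly from the codebook.
    random_codes = rng.integers(0, n_codes, size=code_grid.shape)
    return np.where(mask, random_codes, code_grid)

rng = np.random.default_rng(0)
# Stand-in for the code grid of one encoded drawing (assumed 8x8, 512 codes).
train_codes = rng.integers(0, 512, size=(8, 8))

near = explore(train_codes, 512, 0.05, rng)  # mostly the original codes
far = explore(train_codes, 512, 0.9, rng)    # mostly new codes
```

Decoding `near` would be expected to give an image close to the source drawing, while decoding `far` would drift toward unfamiliar forms.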

In the physical installation, a set of computer-generated images is displayed on a monitor with a throttle bar that lets users navigate through them at any speed.

CREDITS

Artists: Hector Rodriguez, Jeffrey Shaw and Tjebbe van Tijen

Technical advisor: Mike Wong

Programmer: Sam Chan